The ultimate multi-monitor setup with the Framework 16

The Challenge: Replacing Desktops with a Laptop

Replacing a desktop with a laptop isn't exactly straightforward, especially when you're used to working with 3 monitors at all times and also enjoy a bit of gaming now and then. However, I had an idea: if I replaced each of my two desktops with an external GPU (eGPU), a powerful laptop could fill all the remaining gaps.

The Ultimate Build

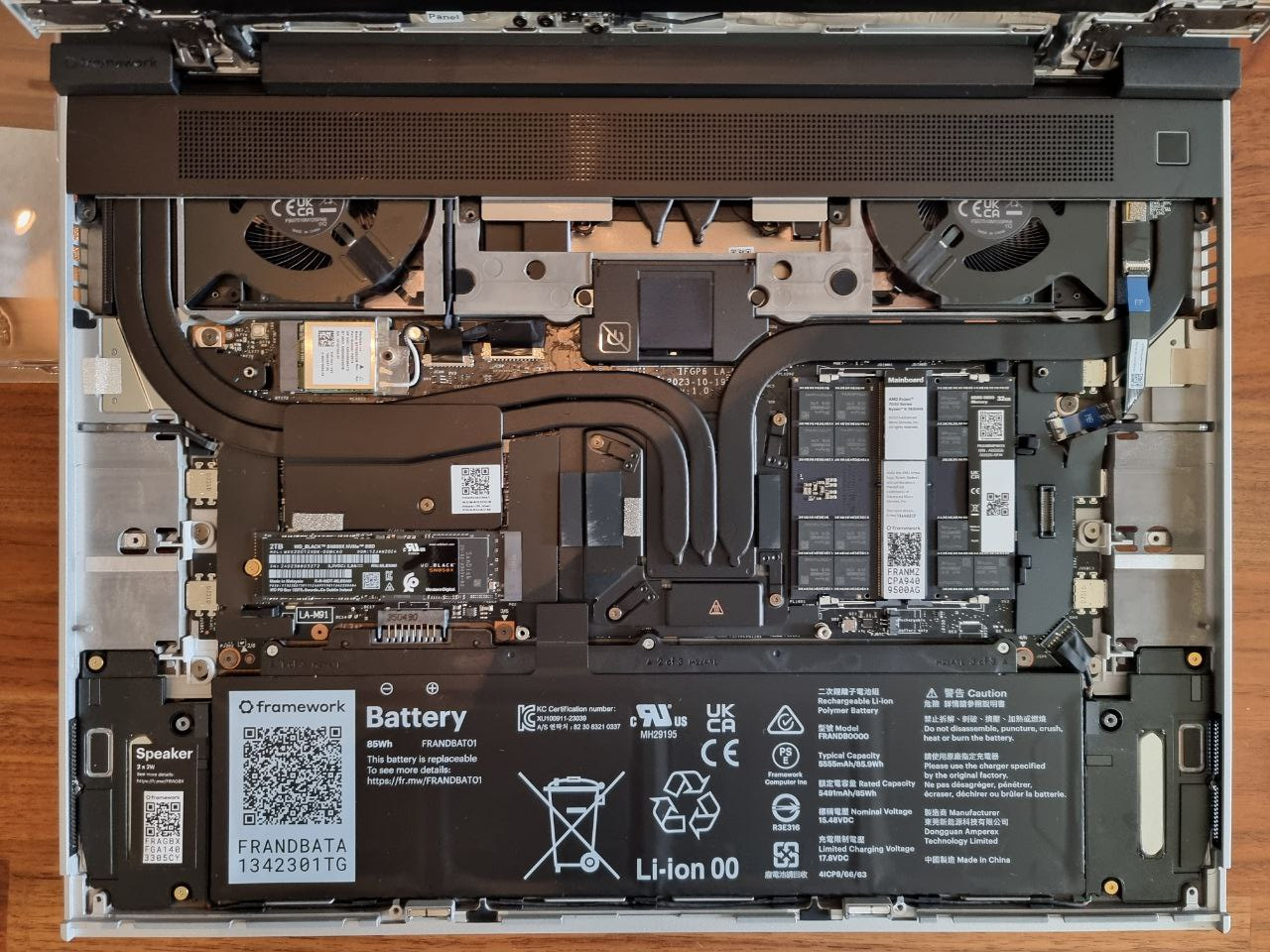

Opting to make my Framework 16 a powerhouse, I chose the following specs:

- CPU: AMD Ryzen 9 7940HS

- GPU: AMD Radeon RX 7700S (utilizing the GPU module extension)

- Memory: 64 GiB of DDR5 RAM

- Storage: 4 TiB SSD

This setup wasn't cheap, but it was a strategic investment: it allows for partial upgrades in the future if I ever need a more powerful CPU or more memory.

The eGPU Solution

I decided to use Razer Core X Chroma eGPUs—one for the office and one for home.

That's great in theory, but in practice I could only find one second-hand Razer Core X Chroma. In Europe it seems impossible to buy one new: the product has been out of stock in the Razer shop for months. In fact, I can't find any decent eGPU enclosure in Europe at all, or the ones I do find are significantly worse than Razer's. (And keep in mind, this design is almost 4 years old!)

Anyway, I got one for the office and installed my older AMD RX 5700 XT in it to drive my three monitors. For home, I plan to buy a second one eventually. In theory, this will give me a seamless experience in both places, with the added benefit of powerful graphics processing.

The Hurdle: Multiple GPUs on Linux

Managing multiple GPUs on a Linux machine comes with its own set of challenges, mostly because multi-GPU support is still an afterthought in most desktop environments. And the support that does exist is mostly there to make sure you're rendering on the dedicated GPU rather than the integrated one.

So can you see my problem yet? Gnome preferred to use the RX 7700S (the GPU module extension) instead of the RX 5700 XT (the one inside the eGPU).

I didn't even notice at first, because I was actually using the 3 outputs of the RX 5700 XT. There was nothing plugged into the RX 7700S.

But there was this horrible sluggishness to the entire system. It wasn't lagging or dropping frames (yet), but it felt very weird. This is the setup:

- Monitor 1: 4K 60Hz

- Monitor 2: 4K 144Hz

- Monitor 3: 4K 60Hz

At first I thought my middle monitor wasn't running at 144Hz, but it actually was: the UFO test confirmed it, and moving my mouse around like crazy confirmed it too. Yet the mouse still seemed to lag ever so slightly.

When I started digging, I noticed the laptop was using the RX 7700S module to render everything and then sending the result out through the RX 5700 XT. My guess is that those extra round trips between the two GPUs added some latency.
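If you want to check which GPU mutter picked on your own machine, one rough approach is to search the current boot's GNOME Shell log for GPU-related lines. This is a sketch under assumptions: that gnome-shell's messages can be matched with `_COMM=gnome-shell` in `journalctl`, and that mutter mentions the DRM device nodes it uses. The exact wording of mutter's messages changes between versions, so the filter (`filter_gpu_lines`, a hypothetical helper name) is deliberately broad.

```shell
#!/usr/bin/env bash
# Keep only lines that mention a GPU or a DRM device node (/dev/dri/...).
# The pattern is deliberately broad because mutter's wording varies by version.
filter_gpu_lines() {
  grep -iE 'gpu|/dev/dri/' || true   # "|| true": no matches is not an error
}

# Query this boot's journal for gnome-shell messages, if journalctl exists.
if command -v journalctl >/dev/null 2>&1; then
  journalctl -b _COMM=gnome-shell --no-pager 2>/dev/null | filter_gpu_lines
fi
```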

After the system had been running for a while, the lag got much worse: GNOME animations took multiple seconds to complete, running at a few frames per second.

Is that also due to the latency? Isn't the RX 7700S technically a more performant GPU than my aging RX 5700 XT? I didn't get it, but either way, I wanted to switch to the RX 5700 XT and see if that made anything better.

Implementing a Solution

My initial focus was to force GNOME to prioritize the RX 5700 XT. I found the mutter-primary-gpu extension, but unfortunately it was outdated. Still, the way it works is relatively simple: it adjusts udev rules to tag the preferred GPU.

So I set out to create a udev rule that would make the system prefer the RX 5700 XT:

```
sudo nano /etc/udev/rules.d/61-mutter-primary-gpu.rules
```

And then I naively added the following lines (just to be clear: these are not correct):

```
ENV{DEVNAME}=="/dev/dri/card*", TAG="dummytag"
ENV{DEVNAME}=="/dev/dri/card2", TAG+="mutter-device-preferred-primary"
```

(The first line replaces whatever tags each card already has with a dummy one, effectively clearing them; the second adds the "preferred" tag to the card you want.)

I thought: "I plugged the eGPU in last, so it'll be card2." Wrong. The numbering wasn't consistent: sometimes card2 was the eGPU, other times it was the integrated GPU 🤷‍♂️
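Because the numbering isn't stable, it's worth checking which cardN each GPU got on the current boot. A minimal sketch that reads the mapping straight from sysfs; `list_drm_cards` and the `SYSFS` override are hypothetical names of my own (the override only exists so the function can be tested against a fake directory tree).

```shell
#!/usr/bin/env bash
# Print "cardN <TAB> PCI address <TAB> driver" for every DRM card node.
# SYSFS can be overridden (e.g. for testing); it defaults to the real /sys.
list_drm_cards() {
  local sysfs="${SYSFS:-/sys}" card name pci driver
  for card in "$sysfs"/class/drm/card[0-9]*; do
    name=$(basename "$card")
    # Skip connector entries such as card1-DP-1; we only want the GPU itself.
    case "$name" in *-*) continue ;; esac
    [ -e "$card/device" ] || continue
    # "device" points at the PCI device directory, which is named after its address.
    pci=$(basename "$(readlink -f "$card/device")")
    # "device/driver" (if bound) points at the kernel driver, e.g. amdgpu.
    if [ -e "$card/device/driver" ]; then
      driver=$(basename "$(readlink -f "$card/device/driver")")
    else
      driver="(none)"
    fi
    printf '%s\t%s\t%s\n' "$name" "$pci" "$driver"
  done
}

list_drm_cards
```

On a machine like mine, the eGPU's line would show its PCI address (0000:6a:00.0 here) no matter which card number it happens to get.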

Then I thought: I'll use the by-path symlinks! So I went with this:

```
ENV{DEVNAME}=="/dev/dri/card*", TAG="dummytag"
ENV{DEVNAME}=="/dev/dri/by-path/pci-0000:6a:00.0-card", TAG+="mutter-device-preferred-primary"
```

That, again, was stupid. /dev/dri/by-path/pci-0000:6a:00.0-card is indeed a symlink to the correct card, but udev doesn't resolve symlinks here: DEVNAME always holds the kernel's own device node name (/dev/dri/cardN), so comparing it to the symlink path never matches.

Luckily, you can target a device by its PCI path instead, which udev exposes as the ID_PATH property:

```
ENV{DEVNAME}=="/dev/dri/card*", TAG="dummytag"
ENV{ID_PATH}=="pci-0000:6a:00.0", TAG+="mutter-device-preferred-primary"
```
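Since the PCI address is the only machine-specific part of this rule, the file could also be generated from it. A hypothetical helper (`make_primary_gpu_rule` is my own name, not part of udev or mutter) that validates the address and prints the two rules above; `lspci -D` lists full addresses, including the `0000:` domain, if you need to find yours.

```shell
#!/usr/bin/env bash
# Print a udev rules file that tags the GPU at the given PCI address
# as mutter's preferred primary GPU.
make_primary_gpu_rule() {
  local pci="$1"
  # Expect domain:bus:slot.function in lowercase hex, as lspci -D and sysfs print it.
  if ! [[ "$pci" =~ ^[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]$ ]]; then
    echo "invalid PCI address: $pci" >&2
    return 1
  fi
  # Rule 1: replace the tags on every DRM card node with a dummy tag.
  printf '%s\n' 'ENV{DEVNAME}=="/dev/dri/card*", TAG="dummytag"'
  # Rule 2: add the "preferred primary" tag to the chosen GPU only.
  printf 'ENV{ID_PATH}=="pci-%s", TAG+="mutter-device-preferred-primary"\n' "$pci"
}
```

For example, `make_primary_gpu_rule 0000:6a:00.0 | sudo tee /etc/udev/rules.d/61-mutter-primary-gpu.rules` would write the same file as the one I created by hand.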

Then I reloaded the udev rules and triggered them:

```
sudo udevadm control --reload-rules
sudo udevadm trigger
```

Finally, I had to restart mutter, and thus the whole GNOME Shell session (on Wayland, that means logging out and back in), to apply the change, since mutter can't switch GPUs while it's running.
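Before logging out, it can help to confirm that udev actually attached the tag. A sketch, assuming your `udevadm info` prints tags as a colon-separated `E: TAGS=` property line (newer systemd versions also print `E: CURRENT_TAGS=`); `has_primary_tag` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Succeed if `udevadm info` output (read from stdin) contains the mutter tag
# in a colon-separated tag property, e.g. "E: TAGS=:seat:mutter-device-preferred-primary:".
has_primary_tag() {
  grep -qE '^E: (CURRENT_)?TAGS=.*:mutter-device-preferred-primary:'
}

# Check every DRM card node that currently exists.
for node in /dev/dri/card[0-9]*; do
  [ -e "$node" ] || continue
  if udevadm info "$node" 2>/dev/null | has_primary_tag; then
    echo "$node is tagged as mutter's preferred primary GPU"
  fi
done
```

If no card is reported, the rule didn't match, and restarting the session won't change anything yet.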

The Result: A Smooth Experience

The outcome was a resounding success. The system became buttery smooth, and all lag issues disappeared. Achieving this multi-monitor experience with my powerful Framework 16 laptop turned out to be a dream come true.

Some caveats

I've been running this setup for almost a month now, and I have run into a few issues. I'm not sure where the problems come from (the Linux kernel, GNOME/mutter, Framework, the eGPU, ...), but luckily none of them are dealbreakers. Here they are:

Booting with the eGPU plugged in doesn't really work

Most of the time, the boot process just hangs, and I have to unplug the eGPU and reboot for it to work. Other times it does boot, and the laptop does charge via the cable, but it won't actually use the eGPU's outputs.

Solution: Always plug the eGPU in after you reach the GDM/KDM/LightDM login screen

Unplugging the eGPU isn't really an option

Unplugging the eGPU causes a lot of issues. Mutter will freak out, and even if you log out of the GNOME Shell session before unplugging it, the entire system might freeze, or at least appear to. I've noticed the laptop actually keeps running, but nothing on any of the monitors ever changes again, and switching to a VT doesn't work either.

Solution: Shut down the laptop when you want to unplug the eGPU 🤷‍♂️

The laptop is not charging

This is another weird one, which has only happened to me about 3 times in the past month: everything seems to be working fine, and the laptop is using the eGPU to render everything, but somehow it's not charging at all. It's not using the power delivery capabilities of the eGPU enclosure.

Solution: There's no real solution, and it's super annoying: you have to unplug the eGPU for it to try again, and as stated in the previous points, that means rebooting. So as soon as you plug the eGPU in, make sure the laptop is actually getting power.
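A quick way to do that check is to read the battery status the kernel exposes in sysfs. A minimal sketch, assuming the battery shows up as `/sys/class/power_supply/BAT*`, as it does on most laptops, and treating "Discharging" as "no power is coming in"; `check_charging` and the `SYSFS` override are hypothetical names used for illustration and testing.

```shell
#!/usr/bin/env bash
# Print each battery's status and return 1 if any battery is discharging,
# i.e. no power is coming in (for example from the eGPU enclosure).
# SYSFS can be overridden for testing; it defaults to the real /sys.
check_charging() {
  local sysfs="${SYSFS:-/sys}" bat status rc=0
  for bat in "$sysfs"/class/power_supply/BAT*; do
    [ -r "$bat/status" ] || continue
    status=$(cat "$bat/status")
    printf '%s: %s\n' "$(basename "$bat")" "$status"
    # Only "Discharging" is flagged: "Full" or "Not charging" can also
    # appear while external power is connected.
    if [ "$status" = "Discharging" ]; then
      rc=1
    fi
  done
  return "$rc"
}

check_charging || echo "Warning: running on battery; the eGPU is not charging the laptop." >&2
```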
